Research

Our research exists to reduce uncertainty in complex systems before decisions are deployed at scale. We investigate the fundamental mechanics of intelligence, governance, and reliability.

Philosophy

How we approach truth.

Evidence Over Assumptions

We do not accept "best practices" without empirical validation. Every architectural decision is treated as a hypothesis to be tested against reality.

Reproducibility

A result that cannot be reproduced is not a result; it is an anecdote. We build systems that are deterministic and observable by design.

Trade-off Analysis

There are no solutions, only trade-offs. We explicitly document what we are optimizing for and, more importantly, what we are sacrificing.

Long-term Validation

We prioritize durability over novelty. Our research timelines are measured in years, so that our systems are validated under sustained operation at scale.

Core Domains

We define strict boundaries for our research to ensure depth and rigor. We do not research everything; we research what matters to the enterprise.

Applied AI & Decision Systems

Investigating the bridge between probabilistic models and deterministic business logic.

System Architecture & Reliability

Designing distributed systems that maintain coherence under partial failure.

Human-AI Interaction

Defining the interfaces where human intent meets machine execution.

Governance, Risk & Accountability

Codifying policy into infrastructure to ensure compliance by default.

Data Integrity & Evaluation

Ensuring the epistemic soundness of the data that feeds our systems.

Active Programs

Work in progress. These are the questions we are currently asking and the hypotheses we are testing.

Autonomous Agent Reliability Framework

[Validating] Developing a standardized methodology for measuring and mitigating hallucination rates in agentic workflows.

Semantic Governance Protocols

[Exploring] Investigating the use of vector embeddings to enforce policy constraints on unstructured data streams.

Zero-Trust Architecture for LLMs

[Publishing] Defining architectural patterns for securing enterprise data when integrating with external foundation models.

Cognitive Load in Human-AI Handoffs

[Investigating] Quantifying the mental effort required for operators to regain context during automated system failures.

Published Work

Tangible proof of our thinking. We publish to contribute to the broader engineering community and to subject our ideas to public scrutiny.

The Architecture of Immutable Intelligence

A whitepaper proposing a new architectural standard for enterprise AI systems that prioritizes reproducibility and auditability over raw performance.

Governance as Code: Implementing Policy at the Compiler Level

Methodologies for translating human-readable compliance policies into machine-enforceable infrastructure constraints.

Latency vs. Accuracy: Trade-offs in Real-time Decision Engines

An empirical analysis of the latency and accuracy trade-offs across decision intelligence architectures in high-frequency trading environments.

Failure Modes of Agentic Workflows

A taxonomy of common failure patterns in autonomous agent systems and strategies for designing robust recovery mechanisms.

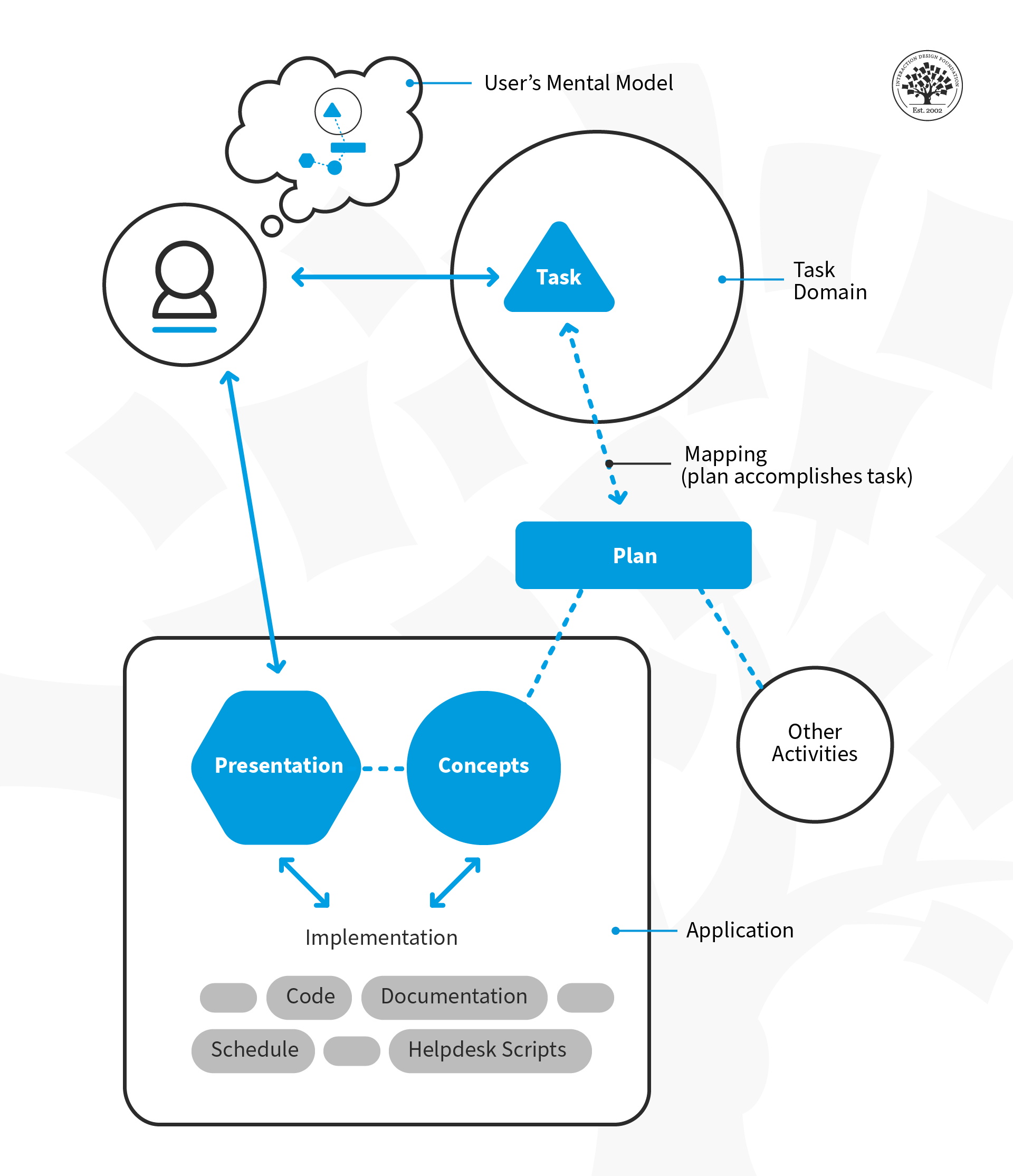

Visual Artifacts

Selected diagrams and models from our recent studies. We use visuals to clarify complex relationships, not to decorate.

Figure 1: Layered System Architecture for Distributed Intelligence

Figure 2: Methodological Framework for Sampling Rates

Figure 3: Mental Models in Human-AI Interaction

Figure 4: Conceptual Modeling of Enterprise Risk

Integrity & Governance

Trust is the currency of research. We maintain strict governance protocols to ensure the validity and safety of our work.

Internal Review

All research outcomes are subject to rigorous internal peer review before being used to inform product decisions or public statements.

Responsible Disclosure

We adhere to standard responsible disclosure protocols when identifying vulnerabilities in external systems or foundation models.

Ethical Boundaries

We maintain clear ethical guidelines regarding data privacy, algorithmic bias, and the potential dual-use nature of AI technologies.

Conflict of Interest

Our research team maintains operational independence from our sales and marketing functions to preserve objectivity.

Collaboration

We collaborate selectively with academic institutions, industry labs, and practitioners who share our commitment to rigorous, long-term systems research.

Contact Research Team →